Understanding the EU AI Act: Key Regulations and Implications for Businesses

The European Union’s Artificial Intelligence Act (EU AI Act) represents the world’s first comprehensive regulatory framework for artificial intelligence. As businesses increasingly adopt AI technologies, understanding this landmark legislation is crucial for ensuring compliance and avoiding significant penalties. This article breaks down the key provisions of the EU AI Act, outlines compliance requirements, and provides actionable steps to help your organization prepare for this new regulatory landscape.

What is the EU AI Act?

The EU AI Act is a European regulation on artificial intelligence—the first comprehensive AI regulation by a major regulator globally. Formally adopted in 2024, the Act takes a risk-based approach to regulating AI systems, applying different rules according to the level of risk they pose to safety, fundamental rights, and democratic values.

The legislation aims to promote the development of human-centric, trustworthy AI while addressing potential risks. Similar to how the General Data Protection Regulation (GDPR) became a global standard for data protection, the EU AI Act is expected to influence AI governance worldwide, affecting companies both within and outside the European Union.

Scope and Application

The EU AI Act applies to various operators in the AI value chain, including:

- Providers: Organizations that develop AI systems or have them developed on their behalf

- Deployers: Organizations that use AI systems in their operations

- Importers: Entities that bring AI systems from outside the EU into the European market

- Distributors: Businesses that make AI systems available on the EU market

- Product manufacturers: Companies that integrate AI systems into their products

Importantly, the Act applies to organizations outside the EU if their AI systems or the outputs of these systems are used within the EU. This extraterritorial scope means global businesses must pay attention to these regulations even if they don’t have a physical presence in Europe.

The Four Risk Categories

The EU AI Act classifies AI systems into four risk categories, with different requirements for each level:

1. Unacceptable Risk (Prohibited Practices)

AI systems that pose an unacceptable risk to people’s safety, livelihoods, and rights are prohibited outright under the EU AI Act.

- Social scoring systems that evaluate individuals based on social behavior, leading to detrimental treatment in unrelated contexts

- Emotion recognition systems in workplaces and educational institutions (except for medical or safety purposes)

- Untargeted scraping of facial images from the internet or CCTV for facial recognition databases

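- Biometric categorization systems that use biometric data to infer sensitive characteristics such as race, political opinions, religious beliefs, or sexual orientation

- Predictive policing systems that assess the risk of an individual committing a crime based solely on profiling or personality traits

- AI systems that use subliminal, manipulative, or deceptive techniques, or exploit vulnerabilities related to age, disability, or social or economic situation, to materially distort people’s behavior in ways that cause significant harm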

2. High-Risk AI Systems

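High-risk AI systems are those that pose significant risks to health, safety, or fundamental rights. These systems are permitted but subject to strict requirements. They include AI systems that are, or are safety components of, products covered by EU product-safety legislation (such as medical devices), as well as systems used in the following areas: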

- Critical infrastructure management (water, gas, electricity)

- Educational and vocational training systems that determine access to education or evaluate students

- Employment-related systems for recruitment, promotion, or task allocation

- Essential private and public services including credit scoring and access to public benefits

- Law enforcement applications not classified as prohibited

- Migration and border control management

- Administration of justice and democratic processes

3. Limited Risk AI Systems

Limited risk AI systems must meet specific transparency requirements but aren’t subject to the strict obligations of high-risk systems.

- Chatbots and virtual assistants must disclose that users are interacting with an AI system

- Emotion recognition systems (in permitted contexts) must inform subjects they’re being analyzed

- AI-generated or manipulated content (“deepfakes”) must be clearly labeled as artificially created

- Providers of systems that generate synthetic audio, images, video, or text must mark those outputs in a machine-readable format so they can be detected as artificially generated

4. Minimal Risk AI Systems

The vast majority of AI systems fall into this category and are subject to minimal or no regulation under the EU AI Act.

- AI-powered spam filters

- Video games with AI components

- Inventory management systems

- Basic recommendation systems (with certain limitations)

- AI-enabled productivity tools
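To make the tiering concrete, here is a minimal first-pass triage sketch. The tag names and the mapping from tags to tiers are hypothetical simplifications of the examples listed above, not categories defined by the Act; any real classification has to be checked against the Act’s text. Because the function returns only the most severe match, it does not capture systems that carry obligations from more than one tier (for example, a high-risk system that also needs a chatbot disclosure).

```python
from enum import Enum


class RiskTier(Enum):
    """The four tiers described above, ordered from least to most severe."""
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical use-case tags summarizing the examples in this article.
# These are NOT legal categories; confirm any result against the Act itself.
PROHIBITED_TAGS = {
    "social_scoring",
    "workplace_emotion_recognition",
    "untargeted_face_scraping",
    "sensitive_biometric_categorization",
}
HIGH_RISK_TAGS = {
    "critical_infrastructure",
    "education_access",
    "recruitment",
    "credit_scoring",
    "public_benefits",
    "law_enforcement",
    "border_control",
    "administration_of_justice",
}
LIMITED_RISK_TAGS = {"chatbot", "emotion_recognition", "deepfake_generation"}


def triage(tags: set[str]) -> RiskTier:
    """Return the most severe tier matched by any tag (first-pass screening only)."""
    if tags & PROHIBITED_TAGS:
        return RiskTier.UNACCEPTABLE
    if tags & HIGH_RISK_TAGS:
        return RiskTier.HIGH
    if tags & LIMITED_RISK_TAGS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    # A recruitment chatbot matches both a high-risk and a limited-risk tag.
    print(triage({"recruitment", "chatbot"}))  # RiskTier.HIGH
    print(triage({"spam_filter"}))  # RiskTier.MINIMAL
```

A result from a screen like this is a starting point for legal review, not a conclusion.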


Compliance Requirements for High-Risk AI Systems

High-risk AI systems face the most stringent requirements under the EU AI Act. Organizations developing or deploying these systems must implement robust governance frameworks and technical measures.

Risk Management System

Providers must implement a continuous risk management system throughout the AI system’s lifecycle. This includes:

- Identifying and analyzing known and foreseeable risks

- Estimating and evaluating risks that may emerge when the system is used as intended or under reasonably foreseeable misuse

- Adopting risk mitigation measures

- Documenting all risk management activities
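One lightweight way to keep the documented, iterative risk records described above is a simple risk register. The sketch below assumes a 1-to-5 likelihood and severity scale and a review threshold of 12; the Act does not prescribe any scoring method, so treat both as illustrative choices.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RiskEntry:
    """One identified risk and its mitigations (assumed 1-5 scales)."""
    description: str
    likelihood: int  # 1 = rare ... 5 = almost certain (illustrative scale)
    severity: int    # 1 = negligible ... 5 = critical (illustrative scale)
    mitigations: list[str] = field(default_factory=list)
    last_reviewed: date = field(default_factory=date.today)

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def open_risks(register: list[RiskEntry], threshold: int = 12) -> list[RiskEntry]:
    """Risks at or above the threshold with no documented mitigation, worst first."""
    flagged = [r for r in register if r.score >= threshold and not r.mitigations]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    register = [
        RiskEntry("Model underperforms for under-represented applicants", 4, 4),
        RiskEntry("Input data drift after deployment", 3, 3),
        RiskEntry("Unauthorized model access", 2, 5, ["Role-based access control"]),
    ]
    for risk in open_risks(register):
        print(risk.score, risk.description)
```

Re-running the check after each model or data change is one way to keep the process continuous rather than a one-off exercise.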

Data Governance

The EU AI Act places significant emphasis on data quality and governance, requiring:

- Training, validation, and testing data that is relevant, sufficiently representative, and, to the best extent possible, free of errors and complete

- Data governance practices addressing potential biases

- Examination of possible data availability and quality issues

- Detailed documentation of data sources and processing methods
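As one illustration of checking whether training data is representative, the sketch below compares each group’s share in a dataset with a reference distribution and reports gaps above a tolerance. The grouping variable, the reference shares, and the 5-percentage-point tolerance are assumptions for illustration; the Act does not define a numeric representativeness test.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Map each group whose observed share deviates from its reference share by
    more than `tolerance` to the signed difference (observed - expected)."""
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


if __name__ == "__main__":
    # Hypothetical age bands of training records vs. an assumed applicant population.
    training_age_bands = ["18-34"] * 70 + ["35-64"] * 25 + ["65+"] * 5
    population = {"18-34": 0.40, "35-64": 0.45, "65+": 0.15}
    print(representation_gaps(training_age_bands, population))
```

A positive value means the group is over-represented in the data; a negative value means it is under-represented.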

Technical Documentation

Comprehensive technical documentation must be maintained, including:

- General description of the AI system and its intended purpose

- Detailed information about system architecture, algorithms, and data

- Description of the risk management system

- Changes to the system throughout its lifecycle

- Validation and testing procedures

Record-Keeping and Traceability

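High-risk AI systems must technically allow the automatic recording of events (logs) over their lifetime to ensure traceability and facilitate post-market monitoring:

- Automatic recording of events relevant to identifying risks, substantial modifications, and operational issues

- For remote biometric identification systems, logging of each period of use

- For remote biometric identification systems, identification of the natural persons involved in verifying the results

- Retention of logs for a period appropriate to the system’s intended purpose, and for at least six months unless other EU or national law provides otherwise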
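A minimal sketch of append-only event logging with a retention check follows. The record fields are hypothetical, and 184 days is used as a conservative stand-in for “at least six months” (no six consecutive calendar months span more than 184 days); the actual retention period must also satisfy any other law that applies.

```python
import json
from datetime import datetime, timedelta, timezone

# Conservative approximation of the six-month minimum retention period.
MIN_RETENTION = timedelta(days=184)


def log_event(log, system_id, event, reviewer=None, now=None):
    """Append one JSON-encoded event record to `log` and return it."""
    record = {
        "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
        "system_id": system_id,
        "event": event,
        "reviewer": reviewer,  # natural person who verified the output, if any
    }
    log.append(json.dumps(record))
    return record


def past_minimum_retention(log, now):
    """Return records older than MIN_RETENTION. They may be eligible for deletion,
    but only if no longer retention period applies."""
    cutoff = now - MIN_RETENTION
    return [
        line for line in log
        if datetime.fromisoformat(json.loads(line)["timestamp"]) < cutoff
    ]
```

Storing timestamps in UTC keeps records comparable across deployments in different time zones.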

Transparency and User Information

Providers must ensure high-risk AI systems are accompanied by clear instructions for use addressed to deployers, including:

- Identity and contact details of the provider

- Characteristics, capabilities, and limitations of the AI system

- Human oversight measures and their operation

- Expected lifetime of the system and maintenance measures

Human Oversight

High-risk AI systems must be designed to allow for effective human oversight, enabling humans to:

- Properly understand the system’s relevant capacities and limitations and monitor its operation

- Remain aware of automation bias

- Correctly interpret the system’s output

- Decide, in any particular situation, not to use the system, or to disregard, override, or reverse its output

- Intervene or interrupt the system through a “stop” button or similar procedure
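The oversight capabilities above can be sketched as a thin wrapper around a model: each automated output is kept alongside the final decision, a named reviewer can override it, and a stop control halts further automated decisions. The class and method names are hypothetical; this illustrates a design pattern, not a mechanism prescribed by the Act.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    model_output: str
    final_output: str
    overridden_by: Optional[str] = None  # reviewer who replaced the model output


class OverseenSystem:
    """Wraps a model so that humans can override outputs or halt the system."""

    def __init__(self, model: Callable[[dict], str]):
        self.model = model
        self.stopped = False

    def stop(self) -> None:
        """The 'stop button': refuse all further automated decisions."""
        self.stopped = True

    def decide(self, case: dict, reviewer: Optional[str] = None,
               override: Optional[str] = None) -> Decision:
        if self.stopped:
            raise RuntimeError("System halted by a human operator")
        output = self.model(case)
        if override is not None:
            if reviewer is None:
                raise ValueError("An override must name the reviewing person")
            return Decision(output, override, overridden_by=reviewer)
        return Decision(output, output)


if __name__ == "__main__":
    # Hypothetical scoring rule standing in for a real model.
    system = OverseenSystem(lambda c: "approve" if c["score"] >= 600 else "reject")
    print(system.decide({"score": 550}, reviewer="officer-1", override="approve"))
```

Keeping both the model output and the final output makes it possible to audit how often, and by whom, the system was overridden.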

Accuracy, Robustness, and Cybersecurity

High-risk AI systems must achieve appropriate levels of:

- Accuracy, with metrics defined and declared in the technical documentation

- Resilience against errors, inconsistencies, and attempts to manipulate the system

- Cybersecurity protections appropriate to the specific risks


Rules for General-Purpose AI Models

The EU AI Act includes specific provisions for general-purpose AI (GPAI) models, such as large language models that can be used across multiple applications.

Basic Requirements for All GPAI Models

- Establishing policies to respect EU copyright laws

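- Creating and publishing a sufficiently detailed summary of the content used to train the model

- Drawing up and maintaining technical documentation of the model, including its training and testing process

- Providing information and documentation to downstream providers that integrate the model into their own AI systems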

Additional Requirements for Systemic Risk Models

GPAI models with “systemic risk” face stricter requirements. A model is classified as having systemic risk if:

- It has high-impact capabilities, which are presumed when the cumulative compute used to train it exceeds 10^25 floating-point operations (FLOPs)

- The European Commission designates it as having capabilities or impact equivalent to high-impact capabilities, taking into account criteria such as its reach in the EU market and its potential negative effects on public health, safety, or fundamental rights

Providers of these models must conduct model evaluations, including adversarial testing, assess and mitigate systemic risks, ensure adequate cybersecurity, and report serious incidents to the AI Office and, where relevant, national authorities.
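To gauge where a model sits relative to the 10^25 FLOP threshold, practitioners often use the rule of thumb that training a dense transformer costs roughly 6 × parameters × training tokens floating-point operations. That approximation is a common heuristic, not a method defined in the Act, and the parameter and token counts below are hypothetical.

```python
# Training compute above which the Act presumes high-impact capabilities.
SYSTEMIC_RISK_THRESHOLD_FLOP = 1e25


def estimated_training_flop(parameters: float, tokens: float) -> float:
    """Rough training compute via the common 6 * N * D heuristic (an assumption)."""
    return 6 * parameters * tokens


def presumed_systemic_risk(parameters: float, tokens: float) -> bool:
    return estimated_training_flop(parameters, tokens) > SYSTEMIC_RISK_THRESHOLD_FLOP


if __name__ == "__main__":
    for params, tokens in [(70e9, 15e12), (400e9, 15e12)]:
        flop = estimated_training_flop(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens -> {flop:.1e} FLOP, "
              f"presumed systemic risk: {presumed_systemic_risk(params, tokens)}")
```

With these assumed figures, 70 billion parameters trained on 15 trillion tokens gives about 6.3 × 10^24 FLOP (below the threshold), while 400 billion parameters on the same data gives about 3.6 × 10^25 FLOP (above it).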

Penalties for Non-Compliance

The EU AI Act establishes significant penalties for non-compliance, with fines varying based on the severity of the violation and the size of the organization.

| Violation Type | Maximum Fine | Examples |
| --- | --- | --- |
| Prohibited AI practices | €35 million or 7% of global annual turnover (whichever is higher) | Deploying social scoring systems, using prohibited biometric identification |
| Non-compliance with requirements for high-risk AI | €15 million or 3% of global annual turnover (whichever is higher) | Inadequate risk management, poor data governance, lack of human oversight |
| Providing incorrect information to authorities | €7.5 million or 1% of global annual turnover (whichever is higher) | Misleading documentation, incomplete reporting |

For small and medium-sized enterprises (SMEs) and startups, the fine is capped at the lower of the two possible amounts specified above, providing some relief for smaller organizations.
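The “whichever is higher” rule and the SME exception reduce to taking the maximum or the minimum of two amounts, as in this sketch. The tier keys are labels invented for this example, turnover means total worldwide annual turnover for the preceding financial year, and actual fines are decided case by case and can fall anywhere up to these caps.

```python
# Fixed cap in euros and share of worldwide annual turnover, per the table above.
# The dictionary keys are labels invented for this sketch.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),
    "incorrect_information": (7_500_000, 0.01),
}


def maximum_fine(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine: the higher of the two amounts, or the lower for SMEs."""
    fixed_cap, turnover_share = FINE_TIERS[tier]
    turnover_cap = turnover_share * worldwide_turnover_eur
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    print(maximum_fine("prohibited_practice", 1_000_000_000))            # large company
    print(maximum_fine("prohibited_practice", 20_000_000, is_sme=True))  # SME
```

For a company with €1 billion in turnover, 7% (€70 million) exceeds €35 million, so the higher figure is the cap. For an SME with €20 million in turnover, 7% is €1.4 million, which is lower than €35 million and therefore becomes the cap.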

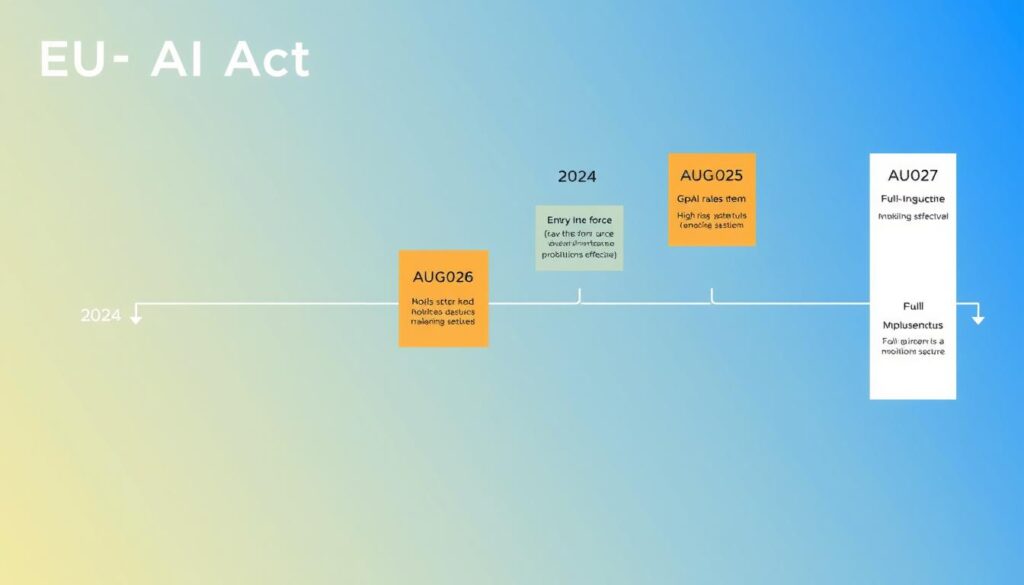

Implementation Timeline

The EU AI Act entered into force on August 1, 2024, with a phased implementation approach:

- February 2, 2025: Prohibitions on unacceptable risk AI practices take effect

- August 2, 2025: Rules for general-purpose AI models take effect for new models

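- August 2, 2026: Requirements for high-risk AI systems used in the areas listed in Annex III (such as employment and credit scoring) become applicable, along with most other provisions

- August 2, 2027: High-risk requirements take effect for AI systems that are products, or safety components of products, regulated under EU product-safety legislation

- August 2, 2027: Deadline for GPAI models placed on the market before August 2, 2025 to comply with the Act’s requirements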
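For planning purposes, a small lookup like the following can answer “which milestones already apply on a given date?”. The labels paraphrase the list above; the dates should be checked against the Act itself, including any later amendments, before being relied on.

```python
from datetime import date

# Milestones paraphrased from the phased timeline above.
MILESTONES = [
    (date(2025, 2, 2), "Prohibited practices banned"),
    (date(2025, 8, 2), "Obligations for new general-purpose AI models"),
    (date(2026, 8, 2), "Requirements for high-risk AI systems in Annex III areas"),
    (date(2027, 8, 2), "High-risk rules for regulated products; deadline for pre-existing GPAI models"),
]


def applicable_on(day: date) -> list[str]:
    """Return the milestones already in effect on `day`, in chronological order."""
    return [label for start, label in MILESTONES if day >= start]


if __name__ == "__main__":
    for label in applicable_on(date(2026, 1, 15)):
        print(label)
```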

This phased approach gives organizations time to adapt their AI systems and governance frameworks to meet the new requirements.

Industry Impact Analysis

The EU AI Act will affect various industries differently, with some facing more significant compliance challenges than others.

Healthcare

The healthcare industry will see significant impact due to the prevalence of high-risk AI applications:

- Medical devices with AI components will generally be classified as high-risk

- Diagnostic tools must meet stringent accuracy and explainability requirements

- Patient data governance will need to comply with both the EU AI Act and GDPR

- Clinical decision support systems will require robust human oversight mechanisms

Financial Services

Financial institutions face substantial compliance requirements:

- Credit scoring systems are explicitly classified as high-risk

- Algorithmic trading platforms may require enhanced risk management

- Fraud detection systems will need to balance effectiveness with transparency

- Risk assessment and pricing tools for life and health insurance are classified as high-risk and must avoid discriminatory outcomes

Technology Sector

Technology companies developing AI systems face varying levels of impact:

- GPAI model providers must comply with model-specific requirements

- Consumer product manufacturers using AI must assess risk levels

- Social media platforms using recommendation algorithms may face transparency requirements

- Cloud service providers hosting AI applications may need to support compliance efforts

Case Study: FinTech Compliance

Consider a hypothetical European fintech company, “CreditAI,” that uses artificial intelligence for credit scoring and loan approval decisions.

Pre-Compliance State

- Uses a machine learning algorithm to evaluate loan applications

- Limited documentation on data sources and model training

- Minimal human review of algorithmic decisions

- No formal risk assessment process

Compliance Actions

- Risk Classification: Identified their credit scoring system as high-risk under the EU AI Act

- Data Governance: Implemented comprehensive data quality controls and bias detection

- Documentation: Created detailed technical documentation of the AI system

- Human Oversight: Established a review process where humans can override algorithmic decisions

- Transparency: Developed clear explanations for loan applicants about how AI influences decisions

- Testing: Implemented regular accuracy and bias testing procedures

- Incident Reporting: Created a system to document and report significant incidents
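CreditAI’s “regular accuracy and bias testing” step could start with something as simple as comparing approval rates across applicant groups. The sketch below computes each group’s approval rate and the ratio of the lowest rate to the highest. The 0.8 alert level borrows the “four-fifths” rule of thumb from US employment practice and is an assumption, not a threshold set by the EU AI Act; the group labels and data are hypothetical.

```python
from collections import defaultdict

ALERT_RATIO = 0.8  # assumed alert level ("four-fifths" heuristic), not from the Act


def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> approval rate per group."""
    totals, approvals = defaultdict(int), defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparity_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    sample = ([("group_a", True)] * 80 + [("group_a", False)] * 20
              + [("group_b", True)] * 60 + [("group_b", False)] * 40)
    ratio = disparity_ratio(sample)
    print(f"ratio={ratio:.2f}, flag for review: {ratio < ALERT_RATIO}")
```

A low ratio is a signal to investigate the model and its data, not proof of unlawful discrimination on its own.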

Results

By proactively addressing EU AI Act requirements, CreditAI not only achieved compliance but also improved their product:

- Enhanced model accuracy through better data governance

- Reduced bias in lending decisions

- Improved customer trust through greater transparency

- Minimized regulatory risk and potential penalties

- Created a competitive advantage over less compliant competitors

Challenges for Startups and SMEs

While the EU AI Act provides some accommodations for smaller businesses, startups and SMEs still face significant challenges in achieving compliance.

Key Challenges

- Resource constraints: Limited budget and personnel for compliance activities

- Technical complexity: Difficulty implementing sophisticated risk management systems

- Documentation burden: Extensive record-keeping requirements

- Regulatory uncertainty: Evolving interpretations of the Act’s requirements

- Competitive pressure: Balancing innovation with compliance

Practical Steps for SMEs

Despite these challenges, smaller organizations can take practical steps to prepare:

- Conduct an AI inventory: Identify all AI systems in use and assess their risk level

- Prioritize high-risk systems: Focus compliance efforts on systems most likely to be classified as high-risk

- Leverage existing frameworks: Build on existing GDPR compliance measures where applicable

- Implement documentation practices: Start documenting AI systems and their development process

- Consider partnerships: Collaborate with other organizations to share compliance resources

- Monitor regulatory developments: Stay informed about evolving guidance and interpretations

- Engage with industry associations: Participate in sector-specific compliance initiatives


Global Significance and Future Implications

The EU AI Act’s influence extends far beyond Europe’s borders, potentially shaping global AI governance in several ways:

The “Brussels Effect”

Similar to how GDPR influenced global data protection standards, the EU AI Act may create a “Brussels Effect” where:

- Companies implement EU standards globally rather than maintaining different systems

- Other jurisdictions use the EU framework as a template for their own regulations

- International standards bodies incorporate elements of the EU approach

Emerging Global Landscape

The EU AI Act is part of an evolving global regulatory landscape:

- The United States has no comprehensive federal AI law and relies instead on executive actions, sector-specific rules, and state legislation such as the Colorado AI Act

- China has implemented regulations for recommendation algorithms and deepfakes

- Canada, Brazil, and other countries are developing their own AI governance frameworks

- International organizations like the OECD are promoting AI principles that align with EU values

Future Developments

As AI technology continues to evolve, we can expect:

- Refinements to the EU AI Act through implementing acts and guidelines

- Expansion of regulatory scope to address emerging AI capabilities

- Greater international coordination on AI governance

- Development of technical standards to support compliance

- Growth of the AI governance ecosystem, including auditing and certification services

Conclusion

The EU AI Act represents a watershed moment in AI governance, establishing a comprehensive framework that balances innovation with protection of fundamental rights. For businesses developing or using AI systems, compliance is not just about avoiding penalties—it’s about building trustworthy AI that can be sustainably deployed in the European market and beyond.

Organizations should view the implementation period as an opportunity to assess their AI systems, strengthen governance practices, and potentially gain competitive advantage through early compliance. By taking a proactive approach to the EU AI Act, businesses can not only mitigate regulatory risks but also contribute to the responsible development of AI technology.
